Time series analysis can help you understand how an asset, security, or economic variable changes over time.

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, it is a sequence of observations taken at successive, equally spaced points in time, which makes it a sequence of discrete-time data. Examples include the heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average.

What exactly is time series analysis?

Time series analysis comprises methods for analyzing time series data in order to extract meaningful statistics and other characteristics of the data. Time series forecasting is the use of a model to predict future values based on previously observed values. Although regression analysis is often used to test relationships between one or more different time series, this kind of analysis is not usually called “time series analysis,” a term that refers specifically to relationships between different points in time within a single series.

Time series data have a natural temporal ordering. This sets time series analysis apart from cross-sectional studies, in which the observations have no natural ordering (for example, relating individuals’ incomes to their levels of education, where the data could be entered in any order). Time series analysis also differs from spatial data analysis, where the observations typically relate to geographical locations (for example, accounting for house prices by location as well as by the intrinsic characteristics of the houses). A stochastic model for a time series will generally reflect the fact that observations close together in time are more closely related than observations further apart. In addition, time series models often exploit the one-way ordering of time, expressing the value for a given period as deriving in some way from past values rather than from future values (see time reversibility). A time series is commonly plotted as a run chart (a temporal line chart). Time series are used in many areas of applied science and engineering that involve temporal measurements, including statistics, signal processing, pattern recognition, econometrics, mathematical finance, weather forecasting, earthquake prediction, control engineering, astronomy, and communications engineering.
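The point that nearby observations are more closely related can be checked with the sample autocorrelation at several lags. The sketch below is plain Python under stated assumptions: the function name `autocorrelation` is illustrative, it uses the common estimator that divides by the full-sample sum of squares, and the input is a made-up, steadily rising series of 20 values.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of `series` at `lag` (0 <= lag < len(series))."""
    n = len(series)
    mean = sum(series) / n
    # Denominator: total sum of squared deviations from the mean.
    denom = sum((x - mean) ** 2 for x in series)
    # Numerator: products of deviations for pairs of observations `lag` steps apart.
    num = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag))
    return num / denom


# Hypothetical data: a steadily rising series.
series = [float(t) for t in range(1, 21)]
for lag in (1, 5, 10):
    print(lag, round(autocorrelation(series, lag), 3))
```

For this series the autocorrelation falls as the lag grows, from 0.85 at lag 1 to about 0.28 at lag 5 and below zero at lag 10.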

What is the purpose of a time series?

A time series is a data set that tracks a sample over time. In particular, it lets one see which factors influence certain variables from one period to the next, and therefore how an asset, security, or economic variable changes over time.

What are the different methods for analyzing time series?

Time series analysis methods can be divided into two classes:

1. Frequency-domain methods:

A frequency-domain graph shows how much of a signal lies within each frequency band over a range of frequencies. Frequency-domain methods include spectral analysis and wavelet analysis.

2. Time-domain methods:

Time-domain analysis examines signals, mathematical functions, or time series data with respect to time, and a time-domain graph shows how a signal changes over time. Time-domain methods include autocorrelation and cross-correlation analysis.
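The frequency-domain view in the first item can be illustrated with a raw periodogram, which measures how much of a series’ variance falls at each Fourier frequency. This is a minimal sketch, assuming a synthetic series of 48 points built from a 12-step cycle plus a weaker 4-step cycle; it uses a direct discrete Fourier transform, which is O(n²) and fine for illustration, whereas practical work would normally use an FFT routine.

```python
import math


def periodogram(series):
    """Return (frequency, power) pairs at Fourier frequencies k/n, k = 1..n//2."""
    n = len(series)
    mean = sum(series) / n
    x = [v - mean for v in series]  # deviations from the mean, as usual for a periodogram
    result = []
    for k in range(1, n // 2 + 1):
        # Real and imaginary parts of the k-th discrete Fourier coefficient.
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        result.append((k / n, (re * re + im * im) / n))
    return result


# Hypothetical data: a 12-step cycle plus a weaker 4-step cycle.
series = [math.sin(2 * math.pi * t / 12) + 0.5 * math.sin(2 * math.pi * t / 4)
          for t in range(48)]
peak_freq, peak_power = max(periodogram(series), key=lambda fp: fp[1])
print("dominant period:", round(1 / peak_freq, 6))
```

The largest peak sits at frequency 1/12, recovering the 12-step cycle; the weaker 4-step cycle appears as a smaller peak at frequency 1/4.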

Time series analysis methods can also be divided into parametric and non-parametric methods.

Parametric and non-parametric approaches:

Parametric approaches assume that the underlying stationary stochastic process has a certain structure that can be described using a small number of parameters (for example, an autoregressive or moving-average model). The task of these methods is to estimate the parameters of the model that describes the stochastic process. Non-parametric approaches, by contrast, explicitly estimate the covariance or the spectrum of the process without assuming that it has any particular structure.
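To make the distinction concrete, here is a minimal sketch on simulated data. The parametric route assumes an AR(1) structure, x_t = c + φ·x_{t-1} + ε_t, and estimates its two parameters by ordinary least squares; the non-parametric route estimates the autocovariance directly without assuming a model. The simulated process (c = 2, φ = 0.6, unit Gaussian noise) and the function names are hypothetical choices for illustration.

```python
import random


def fit_ar1(series):
    """Parametric: least-squares estimates of c and phi in x_t = c + phi * x_{t-1} + e_t."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev, mean_curr = sum(prev) / n, sum(curr) / n
    sxy = sum((a - mean_prev) * (b - mean_curr) for a, b in zip(prev, curr))
    sxx = sum((a - mean_prev) ** 2 for a in prev)
    phi = sxy / sxx
    c = mean_curr - phi * mean_prev
    return c, phi


def autocovariance(series, lag):
    """Non-parametric: sample autocovariance at `lag`, with no model assumed."""
    n = len(series)
    mean = sum(series) / n
    return sum((series[t] - mean) * (series[t + lag] - mean) for t in range(n - lag)) / n


# Hypothetical data: simulate x_t = 2 + 0.6 * x_{t-1} + Gaussian noise.
rng = random.Random(0)
series = [5.0]  # start at the process mean, c / (1 - phi) = 5
for _ in range(2000):
    series.append(2.0 + 0.6 * series[-1] + rng.gauss(0.0, 1.0))

c_hat, phi_hat = fit_ar1(series)
rho1 = autocovariance(series, 1) / autocovariance(series, 0)
print(f"AR(1) fit: c = {c_hat:.2f}, phi = {phi_hat:.2f}; lag-1 autocorrelation = {rho1:.2f}")
```

For a stationary AR(1) process the lag-1 autocorrelation equals φ, so both estimates should land near 0.6 here; the parametric fit additionally yields c and a model that can be used for forecasting.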

Time series analysis methods can also be classified as linear or non-linear, and as univariate or multivariate.

What is panel data?

A time series is one type of panel data. Panel data is the general class of multidimensional data sets, whereas a time series data set is a one-dimensional panel (as is a cross-sectional data set). A data set may show characteristics of both panel data and time series data. One way to tell them apart is to ask what makes one record unique from the other records. If the answer is the time data field, the data set is a time series candidate. If identifying a unique record requires a time data field plus an additional identifier unrelated to time (such as a student ID, stock symbol, or country code), it is a panel data candidate. If the differentiation lies in the non-time identifier alone, the data set is a cross-sectional candidate.
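The uniqueness test above can be sketched in a few lines. This is an illustration only: the function `classify`, the field names `date` and `symbol`, and the price records are hypothetical, and the checks run in the order the paragraph gives (time field alone, non-time identifier alone, then both together).

```python
def classify(records, time_field, id_field):
    """Label a list of dict records by which fields make each record unique."""

    def unique_by(*fields):
        keys = [tuple(record[f] for f in fields) for record in records]
        return len(keys) == len(set(keys))

    if unique_by(time_field):
        return "time series candidate"
    if unique_by(id_field):
        return "cross-sectional candidate"
    if unique_by(time_field, id_field):
        return "panel data candidate"
    return "no candidate: more identifying fields are needed"


# Hypothetical closing prices keyed by date and stock symbol.
one_stock = [
    {"date": "2024-01-02", "symbol": "AAA", "close": 10.0},
    {"date": "2024-01-03", "symbol": "AAA", "close": 10.5},
]
one_day = [
    {"date": "2024-01-02", "symbol": "AAA", "close": 10.0},
    {"date": "2024-01-02", "symbol": "BBB", "close": 20.0},
]
panel = one_stock + [
    {"date": "2024-01-02", "symbol": "BBB", "close": 20.0},
    {"date": "2024-01-03", "symbol": "BBB", "close": 19.5},
]
for name, data in [("one_stock", one_stock), ("one_day", one_day), ("panel", panel)]:
    print(name, "->", classify(data, "date", "symbol"))
```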

What is exploratory analysis?

The clearest way to inspect a regular time series by eye is with a line chart, such as a spreadsheet-generated chart of tuberculosis incidence in the United States. In that example, the number of cases was standardized to a rate per 100,000, and the percentage change in this rate per year was computed. The nearly steadily falling line shows that TB incidence declined in most years, but the percentage change in the rate varied by as much as ±10%, with “surges” in 1975 and around the early 1990s. Using both vertical axes allows two time series to be compared in one graph.
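The two computations behind such a chart, standardizing counts to a rate per 100,000 and taking the year-over-year percentage change, can be sketched as follows. The case counts and populations are invented for illustration; they are not the tuberculosis figures from the chart, and the function names are hypothetical.

```python
def rate_per_100k(cases, population):
    """Standardize a case count to a rate per 100,000 people."""
    return cases * 100_000 / population


def percent_changes(values):
    """Period-over-period percentage change; one fewer value than the input."""
    return [(curr - prev) * 100 / prev for prev, curr in zip(values, values[1:])]


# Hypothetical counts and populations for three consecutive years (not real TB data).
cases = [500, 450, 495]
population = [1_000_000, 1_000_000, 1_000_000]
rates = [rate_per_100k(c, p) for c, p in zip(cases, population)]
print(rates, percent_changes(rates))
```

Here the rate falls from 50 to 45 per 100,000 (a -10% change) and then rises to 49.5 (a +10% change), which is the kind of swing that would appear as a “surge” on the percentage-change axis.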

Exploratory time series analysis poses two challenges for business data analysts: finding the shape of interesting patterns and finding an explanation for them. Visual tools that display time series data as heat map matrices can help with both.

Other techniques include:

- Autocorrelation analysis to examine serial dependence

- Spectral analysis to examine cyclic behavior that need not be seasonal. For example, sunspot activity varies over 11-year cycles. Other common examples include celestial phenomena, weather patterns, neural activity, commodity prices, and economic activity.

- Trend estimation and decomposition, separating the series into components representing trend, seasonality, slow and fast variation, and cyclical irregularity.
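The decomposition item can be sketched as a classical additive decomposition, series = trend + seasonal + remainder. This is a minimal version under stated assumptions: an even seasonal period, a series covering at least two full cycles, a centered moving average for the trend, and seasonal effects taken as the average detrended value at each position in the cycle. It is one simple technique among several (STL and model-based methods are common alternatives), and the function names and data are illustrative.

```python
def centered_moving_average(series, period):
    """2 x `period` centered moving average for an even period; None at the ends."""
    half = period // 2
    trend = [None] * len(series)
    for t in range(half, len(series) - half):
        window = series[t - half : t + half + 1]  # period + 1 points centered on t
        # The two end points get half weight so the window stays centered.
        total = sum(window[1:-1]) + 0.5 * (window[0] + window[-1])
        trend[t] = total / period
    return trend


def additive_decompose(series, period):
    """Split series into trend, seasonal, and remainder (series = sum of the three)."""
    if period % 2 != 0:
        raise ValueError("this sketch handles even periods only")
    trend = centered_moving_average(series, period)
    # Group detrended values by their position in the seasonal cycle.
    by_position = {}
    for t, (x, tr) in enumerate(zip(series, trend)):
        if tr is not None:
            by_position.setdefault(t % period, []).append(x - tr)
    raw = [sum(by_position[s]) / len(by_position[s]) for s in range(period)]
    # Center the seasonal effects so they sum to zero over one cycle.
    offset = sum(raw) / period
    seasonal = [r - offset for r in raw]
    remainder = [None if tr is None else x - tr - seasonal[t % period]
                 for t, (x, tr) in enumerate(zip(series, trend))]
    return trend, seasonal, remainder


# Hypothetical quarterly data: linear trend plus a fixed seasonal pattern.
pattern = [2.0, -1.0, 0.5, -1.5]
series = [10 + 0.5 * t + pattern[t % 4] for t in range(24)]
trend, seasonal, remainder = additive_decompose(series, 4)
print("seasonal effects:", seasonal)
```

Because the made-up series is an exact line plus a repeating pattern, the recovered seasonal effects match `pattern` and the remainder is zero wherever the trend is defined; real data would leave a non-zero remainder.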

What is curve fitting?

Curve fitting is the process of constructing a curve, or mathematical function, that best fits a set of data points, possibly subject to constraints.

Curve fitting can involve either interpolation, where an exact fit to the data is required, or smoothing, where a “smooth” function is constructed that approximately fits the data. A related topic is regression analysis, which focuses more on questions of statistical inference, such as how much uncertainty is present in a curve fitted to data observed with random errors. Fitted curves can be used as an aid for data visualization, to infer the values of a function where no data are available, and to summarize the relationships between two or more variables.
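As a sketch of smoothing, the simplest case is a least-squares straight line: it minimizes the sum of squared vertical errors instead of passing through every point, so its residuals are generally non-zero. The data points and the function name `least_squares_line` are hypothetical.

```python
def least_squares_line(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared vertical errors."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical noisy observations of a roughly linear relationship.
xs = [0, 1, 2, 3, 4]
ys = [1.0, 2.9, 5.2, 6.8, 9.1]
intercept, slope = least_squares_line(xs, ys)
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
print(f"y = {intercept:.2f} + {slope:.2f} x")
print("residuals:", [round(r, 2) for r in residuals])
```

The fitted line is y = 0.98 + 2.01x; its residuals sum to zero but are individually non-zero, which is what distinguishes a smoothing fit from an interpolating one.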

What is extrapolation?

Extrapolation is the use of a fitted curve beyond the range of the observed data. It carries a degree of uncertainty, because the result may reflect the method used to construct the curve as much as it reflects the observed data.

Extrapolation is the process of estimating the value of a variable beyond the original observation range on the basis of its relationship with another variable. It is similar to interpolation, which produces estimates between known observations, but extrapolation is subject to greater uncertainty and a higher risk of producing meaningless results.

What is interpolation?

Interpolation is the estimation of an unknown quantity between two known quantities (historical data), or the drawing of conclusions about missing information from the available information (“reading between the lines”). Some economic time series are constructed by interpolating between values (“benchmarks”) for earlier and later periods to estimate components for intermediate dates. Interpolation is useful when the data surrounding the missing data are available and their trend, seasonality, and longer-term cycles are known. This is often done by using a related series that is known for all relevant dates. Alternatively, polynomial or spline interpolation can be used; in spline interpolation, piecewise polynomial functions are fitted to time intervals so that they join together smoothly.

A separate problem that is closely related to interpolation is the approximation of a complicated function by a simple function (also called regression). The main difference between polynomial regression and interpolation is that polynomial regression gives a single polynomial that models the entire data set, whereas spline interpolation yields a piecewise continuous function composed of many polynomials.
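The simplest form of gap-filling, straight-line interpolation between the nearest known values, can be sketched as below. It is deliberately minimal: it ignores seasonality and related series, which the paragraph notes are often needed, and spline interpolation would give a smoother result. The function name and data are hypothetical.

```python
def fill_linear(series):
    """Fill None gaps by straight-line interpolation between the nearest known values.

    Gaps before the first or after the last known value are left as None,
    since filling them would be extrapolation rather than interpolation.
    """
    filled = list(series)
    known = [i for i, v in enumerate(series) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled


# Hypothetical monthly values with missing observations.
print(fill_linear([1.0, None, None, 4.0, None, 8.0, None]))
```

The interior gaps become 2.0, 3.0, and 6.0, while the trailing gap stays `None`.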

How to forecast and predict using time series

In statistics, prediction is a part of statistical inference. Predictive inference is one particular approach to such inference, but predictions can be made within any of the several approaches to statistical inference. Indeed, one description of statistics is that it provides a means of transferring knowledge about a sample of a population to the whole population and to other related populations, which is not necessarily the same as prediction over time. When information is transferred across time, often to specific points in time, the process is known as forecasting.
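As one concrete instance of transferring information forward in time, here is a sketch of simple exponential smoothing, a basic forecasting method chosen for illustration rather than one the text prescribes. The smoothing parameter `alpha`, the initialization with the first observation, and the data are all assumptions.

```python
def ses_forecast(series, alpha, horizon):
    """Simple exponential smoothing: flat forecast at the final smoothed level."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialize the level with the first observation
    for x in series[1:]:
        # Blend the new observation with the previous level.
        level = alpha * x + (1 - alpha) * level
    return [level] * horizon


# Hypothetical observations; alpha = 0.5 is an arbitrary choice for illustration.
history = [10.0, 12.0, 11.0, 13.0]
print(ses_forecast(history, alpha=0.5, horizon=3))
```

With `alpha=0.5` the forecast for each of the next three periods is 12.0; setting `alpha=1` reduces the method to the naive forecast, which simply repeats the last observed value.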

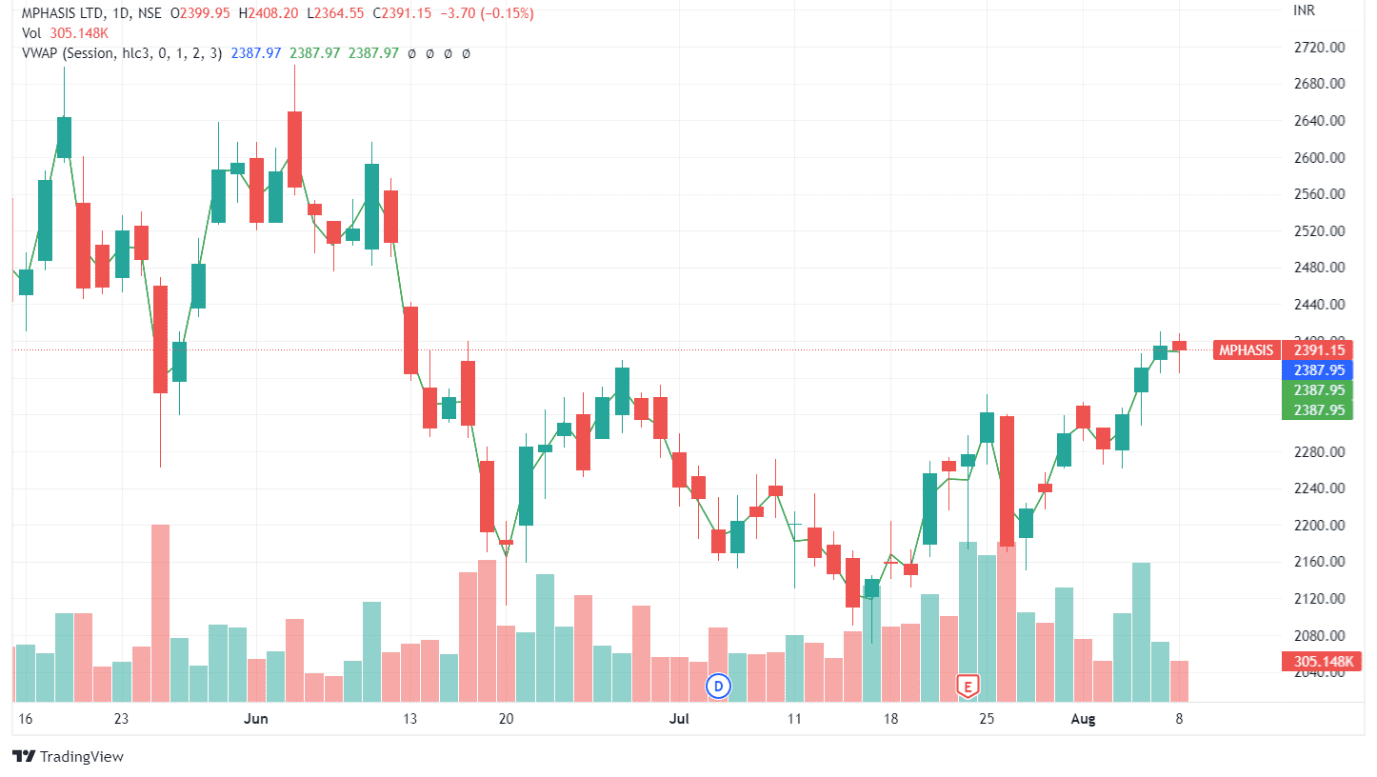

How to use time series in finance?

In finance, a time series is a set of observations on the outcomes of a variable over time, such as a company’s quarterly sales over the last five years or the daily returns on a traded security. Models fitted to such data can be used to support investment decisions.

A typical treatment begins by describing the difficulties commonly encountered when the linear regression model is applied to time series data. It then presents linear and log-linear trend models, which describe, respectively, the value and the natural log of the value of a time series as a linear function of time. Finally, it presents autoregressive time series models, which explain the current value of a time series in terms of one or more of its lagged values. These models are among the most widely used in investing.
